The rise of artificial intelligence (AI) has prompted growing concerns over identity verification tools at cryptocurrency exchanges.

With AI technology evolving rapidly, creating deepfake proofs of identity is becoming easier than ever. Concerns about AI-enabled risks in crypto have prompted some prominent industry executives to speak out on the matter.

Changpeng Zhao, CEO and founder of major global exchange Binance, took to Twitter on Aug. 9 to raise the alarm on the use of AI in crypto by bad actors.

“This is pretty scary from a video verification perspective. Don’t send people coins even if they send you a video,” Zhao wrote.

This is pretty scary from a video verification perspective. Don’t send people coins even if they send you a video… https://t.co/jvjZGHihku

— CZ Binance (@cz_binance) August 9, 2023

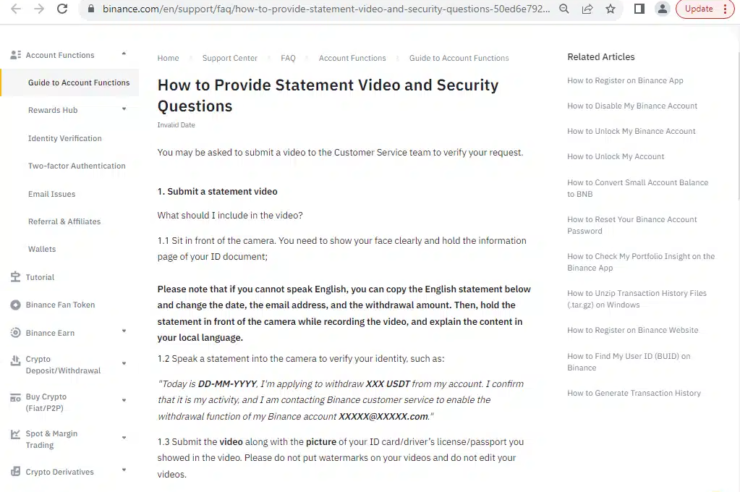

As at many other crypto exchanges, Binance’s internal Know Your Customer (KYC) processes require crypto investors to submit video evidence before certain transactions are processed.

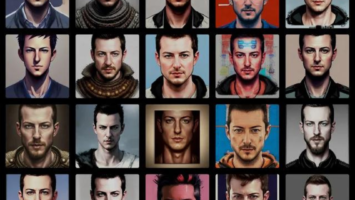

The Binance CEO was referring to an AI-generated video featuring HeyGen co-founder and CEO Joshua Xu. The video included Xu’s AI-generated avatar, which looks just like the real HeyGen CEO and reproduces his facial expressions as well as his voice and speech patterns.

“Both of these video clips were 100% AI-generated, featuring my own avatar and voice clone,” Xu noted. He added that HeyGen has made major enhancements to the video quality and voice technology of its lifelike avatar so that it mimics his unique accent and speech patterns.

“This will be soon deployed to production and everyone can try it out,” Xu added.

Once available to the public, the AI tool would allow anyone to create a lifelike digital avatar in just “two minutes,” the HeyGen CEO said.

Public access to AI generation tools like HeyGen could cause serious identity verification issues for cryptocurrency exchanges like Binance. As part of its KYC measures, Binance requires users to send a video featuring themselves and certain documents in order to access services or even to withdraw funds from the platform.

Binance’s statement video policy requires users to submit the video along with a picture of an identity document, such as an ID card, driver’s license or passport. The policy also requires users to state the date and certain requests in the video recording.

“Please do not put watermarks on your videos and do not edit your videos,” the policy reads.

Binance’s chief security officer, Jimmy Su, has previously warned about the risks associated with AI deepfakes as well. In late May, Su argued that AI tech is getting so advanced that deepfakes may soon become undetectable by a human verifier.

Binance and HeyGen did not immediately respond to Cointelegraph’s request for comment. This article will be updated pending new information.
